Track Reference#

Definition#

A track is a specification of one or more benchmarking scenarios with a specific document corpus. It defines for example the involved indices or data streams, data files and the operations that are invoked. Its most important attributes are:

One or more indices or data streams, with the former potentially each having one or more types.

The queries to issue.

Source URL of the benchmark data.

A list of steps to run, which we’ll call “challenge”, for example indexing data with a specific number of documents per bulk request or running searches for a defined number of iterations.

Track File Format and Storage#

A track is specified in a JSON file.

Ad-hoc use#

For ad-hoc use you can store a track definition anywhere on the file system and reference it with --track-path, e.g.:

# provide a directory - Rally searches for a track.json file in this directory

# Track name is "app-logs"

esrally race --track-path=~/Projects/tracks/app-logs

# provide a file name - Rally uses this file directly

# Track name is "syslog"

esrally race --track-path=~/Projects/tracks/syslog.json

Rally will also search for additional files like mappings or data files in the provided directory. If you use advanced features like custom runners or parameter sources we recommend that you create a separate directory per track.

Custom Track Repositories#

Alternatively, you can store Rally tracks also in a dedicated git repository which we call a “track repository”. Rally provides a default track repository that is hosted on Github. You can also add your own track repositories although this requires a bit of additional work. First of all, track repositories need to be managed by git. The reason is that Rally can benchmark multiple versions of Elasticsearch and we use git branches in the track repository to determine the best match for each track (based on the command line parameter --distribution-version). The versioning scheme is as follows:

The

masterbranch needs to work with the latestmainbranch of Elasticsearch.All other branches need to match the version scheme of Elasticsearch, i.e.

MAJOR.MINOR.PATCH-SUFFIXwhere all parts exceptMAJORare optional.

When a track repository has several branches, Rally will pick the most appropriate branch, depending on the Elasticsearch version to be benchmarked, using a match logic in the following order:

Exact match major.minor.patch-SUFFIX (e.g.

7.0.0-beta1)Exact match major.minor.patch (e.g.

7.10.2,6.7.0)Exact match major.minor (e.g.

7.10)Nearest prior minor branch

e.g. if available branches are

master,7,7.2and7.11attempting to benchmarking ES7.10.2will pick7.2, whereas benchmarking ES7.12.1will pick branch7.11Nearest major branch

e.g. if available branches are

master,5,6and7, benchmarking ES7.11.0will pick branch7

The following table explains in more detail the ES version compatibility scheme using a scenario where a track repository contains four branches master, 7.0.0-beta1, 7.3 and 6:

track branch |

ES version compatibility |

|---|---|

|

compatible only with the latest development version of Elasticsearch |

|

compatible only with the released version |

|

compatible with all ES |

|

compatible with all Elasticsearch releases with the major release number |

Rally will also search for related files like mappings or custom runners or parameter sources in the track repository. However, Rally will use a separate directory to look for data files (~/.rally/benchmarks/data/$TRACK_NAME/). The reason is simply that we do not want to check-in multi-GB data files into git.

Creating a new track repository#

All track repositories are located in ~/.rally/benchmarks/tracks. If you want to add a dedicated track repository, called private follow these steps:

cd ~/.rally/benchmarks/tracks

mkdir private

cd private

git init

# add your track now

git add .

git commit -m "Initial commit"

If you want to share your tracks with others you need to add a remote and push it:

git remote add origin git@git-repos.acme.com:acme/rally-tracks.git

git push -u origin master

If you have added a remote you should also add it in ~/.rally/rally.ini, otherwise you can skip this step. Open the file in your editor of choice and add the following line in the section tracks:

private.url = <<URL_TO_YOUR_ORIGIN>>

If you specify --track-repository=private, Rally will check whether there is a directory ~/.rally/benchmarks/tracks/private. If there is none, it will use the provided URL to clone the repo. However, if the directory already exists, the property gets ignored and Rally will just update the local tracking branches before the benchmark starts.

You can now verify that everything works by listing all tracks in this track repository:

esrally list tracks --track-repository=private

This shows all tracks that are available on the master branch of this repository. Suppose you only created tracks on the branch 2 because you’re interested in the performance of Elasticsearch 2.x, then you can specify also the distribution version:

esrally list tracks --track-repository=private --distribution-version=7.0.0

Rally will follow the same branch fallback logic as described above.

Adding an already existing track repository#

If you want to add a track repository that already exists, just open ~/.rally/rally.ini in your editor of choice and add the following line in the section tracks:

your_repo_name.url = <<URL_TO_YOUR_ORIGIN>>

After you have added this line, have Rally list the tracks in this repository:

esrally list tracks --track-repository=your_repo_name

When to use what?#

We recommend the following path:

Start with a simple json file. The file name can be arbitrary.

If you need custom runners or parameter sources, create one directory per track. Then you can keep everything that is related to one track in one place. Remember that the track JSON file needs to be named

track.json.If you want to version your tracks so they can work with multiple versions of Elasticsearch (e.g. you are running benchmarks before an upgrade), use a track repository.

Anatomy of a track#

A track JSON file can include the following sections:

In the indices and templates sections you define the relevant indices and index templates. These sections are optional but recommended if you want to create indices and index templates with the help of Rally. The index templates here represent the legacy Elasticsearch index templates which have been deprecated in Elasticsearch 7.9. Users should refer to the composable-templates and component-templates for new tracks.

In the data-streams section you define the relevant data streams. This section is optional but recommended if you want to create or delete data streams with the help of Rally. Data streams will often reference a composable template and require these to be inserted to Elasticsearch first.

In the composable-templates and component-templates sections you define the relevant composable and component templates. Although optional, these will likely be required if data streams are being used.

In the corpora section you define all document corpora (i.e. data files) that Rally should use for this track.

In the operations section you describe which operations are available for this track and how they are parametrized. This section is optional and you can also define any operations directly per challenge. You can use it if you want to share operation definitions between challenges.

In the schedule section you describe the workload for the benchmark, for example index with two clients at maximum throughput while searching with another two clients with ten operations per second. The schedule either uses the operations defined in the operations block or defines the operations to execute inline.

In the challenges section you describe more than one set of operations, in the event your track needs to test more than one set of scenarios. This section is optional, and more information can be found in the challenges section.

Creating a track does not require all of the above sections to be used. Tracks that are used against existing data may only rely on querying operations and can omit the indices, templates, and corpora sections. An example of this can be found in the task with a single track example.

Track elements#

The track elements that are described here are defined in Rally’s JSON schema for tracks. Rally uses this track schema to validate your tracks when it is loading them.

Each track defines the following info attributes:

version(optional): An integer describing the track specification version in use. Rally uses it to detect incompatible future track specification versions and raise an error. See the table below for a reference of valid versions.description(optional): A human-readable description of the track. Although it is optional, we recommend providing it.

Track Specification Version |

Rally version |

|---|---|

1 |

>=0.7.3, <0.10.0 |

2 |

>=0.9.0 |

The version property has been introduced with Rally 0.7.3. Rally versions before 0.7.3 do not recognize this property and thus cannot detect incompatible track specification versions.

Example:

{

"version": 2,

"description": "POIs from Geonames"

}

meta#

For each track, an optional structure, called meta can be defined. You are free which properties this element should contain.

This element can also be defined on the following elements:

challengeoperationtask

If the meta structure contains the same key on different elements, more specific ones will override the same key of more generic elements. The order from generic to most specific is:

track

challenge

operation

task

E.g. a key defined on a task, will override the same key defined on a challenge. All properties defined within the merged meta structure, will get copied into each metrics record.

indices#

The indices section contains a list of all indices that are used by this track. Cannot be used if the data-streams section is specified.

Each index in this list consists of the following properties:

name(mandatory): The name of the index.body(optional): File name of the corresponding index definition that will be used as body in the create index API call.types(optional): A list of type names in this index. Types have been removed in Elasticsearch 7.0.0 so you must not specify this property if you want to benchmark Elasticsearch 7.0.0 or later.

Example:

"indices": [

{

"name": "geonames",

"body": "geonames-index.json",

"types": ["docs"]

}

]

templates#

The templates section contains a list of all index templates that Rally should create.

name(mandatory): Index template name.index-pattern(mandatory): Index pattern that matches the index template. This must match the definition in the index template file.delete-matching-indices(optional, defaults totrue): Delete all indices that match the provided index pattern before start of the benchmark.template(mandatory): Index template file name.

Example:

"templates": [

{

"name": "my-default-index-template",

"index-pattern": "my-index-*",

"delete-matching-indices": true,

"template": "default-template.json"

}

]

data-streams#

The data-streams section contains a list of all data streams that are used by this track. Cannot be used if the indices section is specified.

Each data stream in this list consists of the following properties:

name(mandatory): The name of the data-stream.

Example:

"data-streams": [

{

"name": "my-logging-data-stream"

}

]

composable-templates#

The composable-templates section contains a list of all composable templates that Rally should create. These composable templates will often reference component templates which should also be declared first using the component-templates section.

Each composable template in this list consists of the following properties:

name(mandatory): Composable template name.index-pattern(mandatory): Index pattern that matches the composable template. This must match the definition in the template file.delete-matching-indices(optional, defaults totrue): Delete all indices that match the provided index pattern if the template is deleted. This setting is ignored in Elastic Serverless - please use data streams anddelete-data-streamoperation instead.template(mandatory): Composable template file name.template-path(optional): JSON field inside the file content that contains the template.

Example:

"composable-templates": [

{

"name": "my-default-composable-template",

"index-pattern": "my-index-*",

"delete-matching-indices": true,

"template": "composable-template.json"

}

]

component-templates#

The component-templates section contains a list of all component templates that Rally should create. These component templates will often be referenced by composable templates which can be declared using the composable-templates section.

Each component template in this list consists of the following properties:

name(mandatory): Component template name.template(mandatory): Component template file name.template-path(optional): JSON field inside the file content that contains the template.

Example:

"component-templates": [

{

"name": "my-default-component-template",

"template": "one-shard-template.json"

}

]

corpora#

The corpora section contains all document corpora that are used by this track. Note that you can reuse document corpora across tracks; just copy & paste the respective corpora definitions. It consists of the following properties:

name(mandatory): Name of this document corpus. As this name is also used by Rally in directory names, it is recommended to only use lower-case names without whitespaces for maximum compatibility across file systems.documents(mandatory): A list of documents files.meta(optional): A mapping of arbitrary key-value pairs with additional meta-data for a corpus.

Each entry in the documents list consists of the following properties:

base-url(optional): A http(s), S3 or Google Storage URL that points to the root path where Rally can obtain the corresponding source file.S3 support is optional and can be installed with

python -m pip install esrally[s3].http(s) and Google Storage are supported by default.

Rally can also download data from private S3 or Google Storage buckets if access is properly configured:

S3 according to docs.

Google Storage: Either using client library authentication or by presenting an oauth2 token via the

GOOGLE_AUTH_TOKENenvironment variable, typically done using:export GOOGLE_AUTH_TOKEN=$(gcloud auth print-access-token).

source-format(optional, default:bulk): Defines in which format Rally should interpret the data file specified bysource-file. Currently, onlybulkis supported.source-file(mandatory): File name of the corresponding documents. For local use, this file can be a.jsonfile. If you provide abase-urlwe recommend that you provide a compressed file here. The following extensions are supported:.zip,.bz2,.gz,.tar,.tar.gz,.tgz,.tar.bz2orzst. It must contain exactly one JSON file with the same name. The preferred file extension for our official tracks is.bz2.includes-action-and-meta-data(optional, defaults tofalse): Defines whether the documents file contains already an action and meta-data line (true) or only documents (false).Note

When this is

true, thedocument-countproperty should only reflect the number of documents and not additionally include the number of action and metadata lines.document-count(mandatory): Number of documents in the source file. This number is used by Rally to determine which client indexes which part of the document corpus (each of the N clients gets one N-th of the document corpus). If you are using parent-child, specify the number of parent documents.compressed-bytes(optional but recommended): The size in bytes of the compressed source file. This number is used to show users how much data will be downloaded by Rally and also to check whether the download is complete.uncompressed-bytes(optional but recommended): The size in bytes of the source file after decompression. This number is used by Rally to show users how much disk space the decompressed file will need and to check that the whole file could be decompressed successfully.target-index: Defines the name of the index which should be targeted for bulk operations. Rally will automatically derive this value if you have defined exactly one index in theindicessection. Ignored ifincludes-action-and-meta-dataistrue.target-type(optional): Defines the name of the document type which should be targeted for bulk operations. Rally will automatically derive this value if you have defined exactly one index in theindicessection and this index has exactly one type. Ignored ifincludes-action-and-meta-dataistrueor if atarget-data-streamis specified. Types have been removed in Elasticsearch 7.0.0 so you must not specify this property if you want to benchmark Elasticsearch 7.0.0 or later.target-data-stream: Defines the name of the data stream which should be targeted for bulk operations. Rally will automatically derive this value if you have defined exactly one index in thedata-streamssection. Ignored ifincludes-action-and-meta-dataistrue.meta(optional): A mapping of arbitrary key-value pairs with additional meta-data for a source file.

To avoid repetition, you can specify default values on document corpus level for the following properties:

base-urlsource-formatincludes-action-and-meta-datatarget-indextarget-typetarget-data-stream

Examples

Here we define a single document corpus with one set of documents:

"corpora": [

{

"name": "geonames",

"documents": [

{

"base-url": "http://benchmarks.elasticsearch.org.s3.amazonaws.com/corpora/geonames",

"source-file": "documents.json.bz2",

"document-count": 11396505,

"compressed-bytes": 264698741,

"uncompressed-bytes": 3547614383,

"target-index": "geonames",

"target-type": "docs"

}

]

}

]

Here we define a single document corpus with one set of documents using data streams instead of indices:

"corpora": [

{

"name": "http_logs",

"documents": [

{

"base-url": "http://benchmarks.elasticsearch.org.s3.amazonaws.com/corpora/http_logs",

"source-file": "documents-181998.json.bz2",

"document-count": 2708746,

"target-data-stream": "my-logging-data-stream"

}

]

}

]

We can also define default values on document corpus level but override some of them (base-url for the last entry):

"corpora": [

{

"name": "http_logs",

"base-url": "http://benchmarks.elasticsearch.org.s3.amazonaws.com/corpora/http_logs",

"target-type": "docs",

"documents": [

{

"source-file": "documents-181998.json.bz2",

"document-count": 2708746,

"target-index": "logs-181998"

},

{

"source-file": "documents-191998.json.bz2",

"document-count": 9697882,

"target-index": "logs-191998"

},

{

"base-url": "http://example.org/corpora/http_logs",

"source-file": "documents-201998.json.bz2",

"document-count": 13053463,

"target-index": "logs-201998"

}

]

}

]

challenge#

If your track defines only one benchmarking scenario specify the schedule on top-level. Use the challenge element if you want to specify additional properties like a name or a description. You can think of a challenge as a benchmarking scenario. If you have multiple challenges, you can define an array of challenges.

This section contains one or more challenges which describe the benchmark scenarios for this data set. A challenge can reference all operations that are defined in the operations section.

Each challenge consists of the following properties:

name(mandatory): A descriptive name of the challenge. Should not contain spaces in order to simplify handling on the command line for users.description(optional): A human readable description of the challenge.user-info(optional): A message that is printed at the beginning of a race. It is intended to be used to notify e.g. about deprecations.default(optional): If true, Rally selects this challenge by default if the user did not specify a challenge on the command line. If your track only defines one challenge, it is implicitly selected as default, otherwise you need to define"default": trueon exactly one challenge.schedule(mandatory): Defines the workload. It is described in more detail below.

Note

You should strive to minimize the number of challenges. If you just want to run a subset of the tasks in a challenge, use task filtering.

schedule#

The schedule element contains a list of tasks that are executed by Rally, i.e. it describes the workload. Each task consists of the following properties:

name(optional): This property defines an explicit name for the given task. By default the operation’s name is implicitly used as the task name but if the same operation is run multiple times, a unique task name must be specified using this property.tags(optional): This property defines one or more tags for the given task. This can be used for task filtering, e.g. with--exclude-tasks="tag:setup"all tasks except the ones that contain the tagsetupare executed.operation(mandatory): This property refers either to the name of an operation that has been defined in theoperationssection or directly defines an operation inline.clients(optional, defaults to 1): The number of clients that should execute a task concurrently.warmup-iterations(optional, defaults to 0): Number of iterations that each client should execute to warmup the benchmark candidate. Warmup iterations will not show up in the measurement results.iterations(optional, defaults to 1): Number of measurement iterations that each client executes. The command line report will automatically adjust the percentile numbers based on this number (i.e. if you just run 5 iterations you will not get a 99.9th percentile because we need at least 1000 iterations to determine this value precisely).ramp-up-time-period(optional, defaults to 0): Rally will start clients gradually. It reaches the number specified byclientsat the end of the specified time period in seconds. This property requireswarmup-time-periodto be set as well, which must be greater than or equal to the ramp-up time. See the section on ramp-up for more details.warmup-time-period(optional, defaults to 0): A time period in seconds that Rally considers for warmup of the benchmark candidate. All response data captured during warmup will not show up in the measurement results.time-period(optional): A time period in seconds that Rally considers for measurement. Note that for bulk indexing you should usually not define this time period. Rally will just bulk index all documents and consider every sample after the warmup time period as measurement sample.schedule(optional, defaults todeterministic): Defines the schedule for this task, i.e. it defines at which point in time during the benchmark an operation should be executed. For example, if you specify adeterministicschedule and a target-interval of 5 (seconds), Rally will attempt to execute the corresponding operation at second 0, 5, 10, 15 … . Out of the box, Rally supportsdeterministicandpoissonbut you can define your own custom schedules.target-throughput(optional): Defines the benchmark mode. If it is not defined, Rally assumes this is a throughput benchmark and will run the task as fast as it can. This is mostly needed for batch-style operations where it is more important to achieve the best throughput instead of an acceptable latency. If it is defined, it specifies the number of requests per second over all clients. E.g. if you specifytarget-throughput: 1000with 8 clients, it means that each client will issue 125 (= 1000 / 8) requests per second. In total, all clients will issue 1000 requests each second. If Rally reports less than the specified throughput then Elasticsearch simply cannot reach it.target-interval(optional): This is just1 / target-throughput(in seconds) and may be more convenient for cases where the throughput is less than one operation per second. Define eithertarget-throughputortarget-intervalbut not both (otherwise Rally will raise an error).ignore-response-error-level(optional): Controls whether to ignore errors encountered during task execution when the benchmark is run with on-error=abort. The only allowable value isnon-fatalwhich, combined with the cli option--on-error=abort, will ignore non-fatal errors during the execution of the task.run-on-serverless(optional, default to unset): By default, Rally skips operations that are not supported in Elastic Serverless, such as node-stats. Setting this option totrueorfalsewill override that detection.Note

Consult the docs on the cli option on-error for a definition of fatal errors.

Defining operations#

In the following snippet we define two operations force-merge and a match-all query separately in an operations block:

{

"operations": [

{

"name": "force-merge",

"operation-type": "force-merge"

},

{

"name": "match-all-query",

"operation-type": "search",

"body": {

"query": {

"match_all": {}

}

}

}

],

"schedule": [

{

"operation": "force-merge",

"clients": 1

},

{

"operation": "match-all-query",

"clients": 4,

"warmup-iterations": 1000,

"iterations": 1000,

"target-throughput": 100

}

]

}

If we do not want to reuse these operations, we can also define them inline. Note that the operations section is gone:

{

"schedule": [

{

"operation": {

"name": "force-merge",

"operation-type": "force-merge"

},

"clients": 1

},

{

"operation": {

"name": "match-all-query",

"operation-type": "search",

"body": {

"query": {

"match_all": {}

}

}

},

"clients": 4,

"warmup-iterations": 1000,

"iterations": 1000,

"target-throughput": 100

}

]

}

Contrary to the query, the force-merge operation does not take any parameters, so Rally allows us to just specify the operation-type for this operation. Its name will be the same as the operation’s type:

{

"schedule": [

{

"operation": "force-merge",

"clients": 1

},

{

"operation": {

"name": "match-all-query",

"operation-type": "search",

"body": {

"query": {

"match_all": {}

}

}

},

"clients": 4,

"warmup-iterations": 1000,

"iterations": 1000,

"target-throughput": 100

}

]

}

Choosing a schedule#

Rally allows you to choose between the following schedules to simulate traffic:

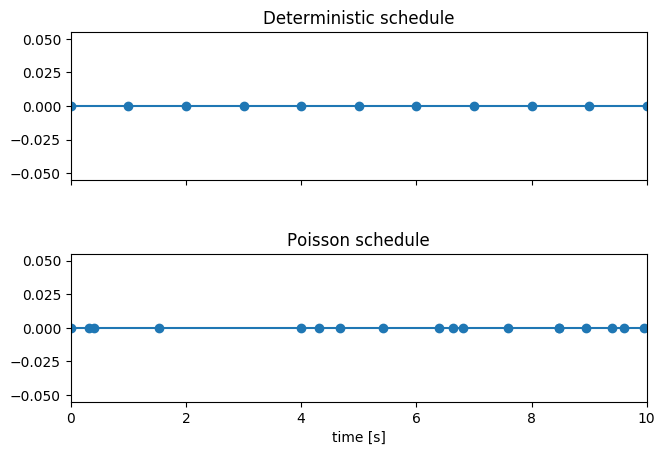

The diagram below shows how different schedules in Rally behave during the first ten seconds of a benchmark. Each schedule is configured for a (mean) target throughput of one operation per second.

If you want as much reproducibility as possible you can choose the deterministic schedule. A Poisson distribution models random independent arrivals of clients which on average match the expected arrival rate which makes it suitable for modelling the behaviour of multiple clients that decide independently when to issue a request. For this reason, Poisson processes play an important role in queueing theory.

If you have more complex needs on how to model traffic, you can also implement a custom schedule.

Ramp-up load#

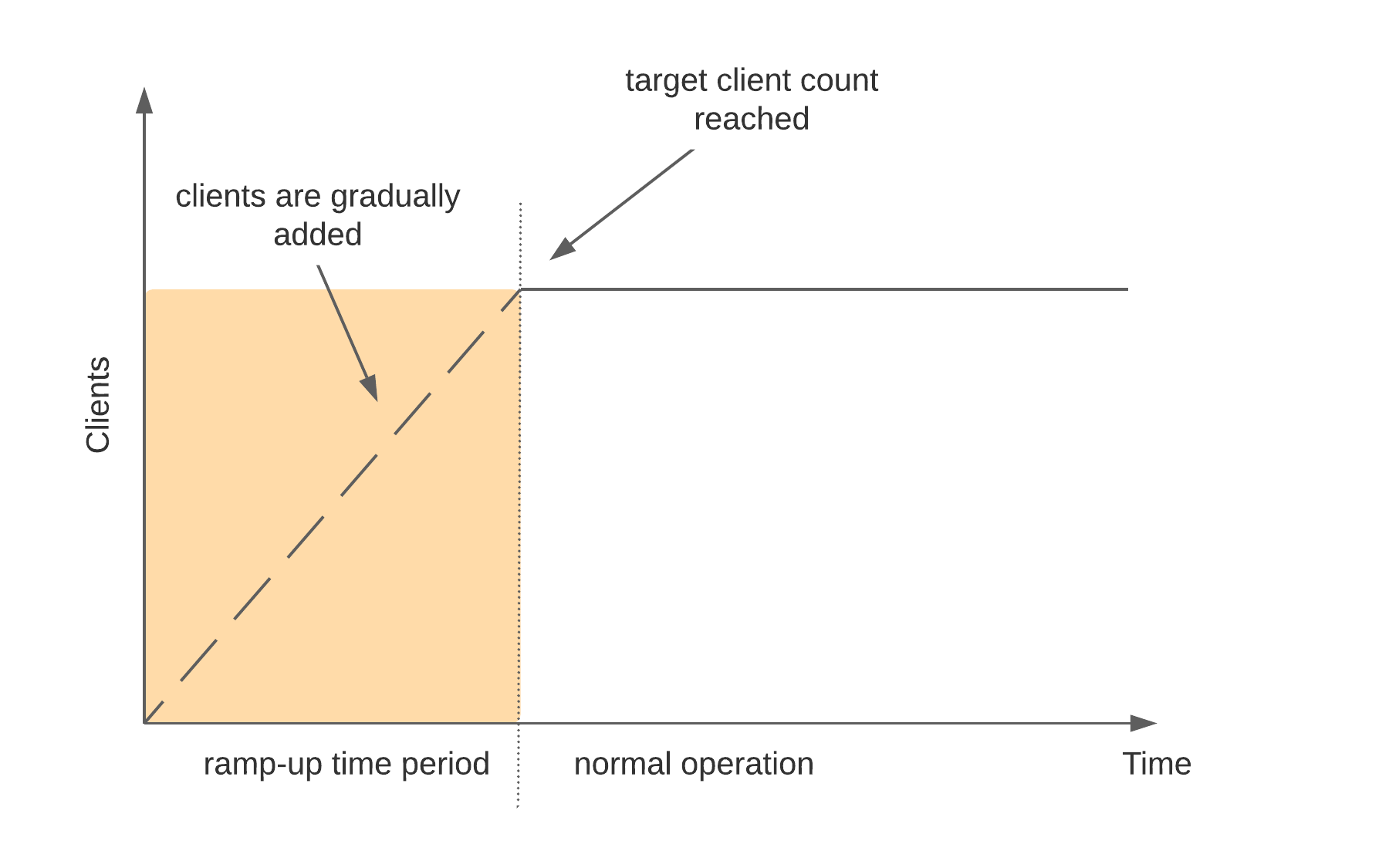

For benchmarks involving many clients it can be useful to increase load gradually. This avoids load spikes at the beginning of a benchmark when Elasticsearch is not yet warmed up. Rally will gradually add more clients over time but each client will already attempt to reach its specified target throughput. The diagram below shows how clients are added over time:

Time-based vs. iteration-based#

You should usually use time periods for batch style operations and iterations for the rest. However, you can also choose to run a query for a certain time period.

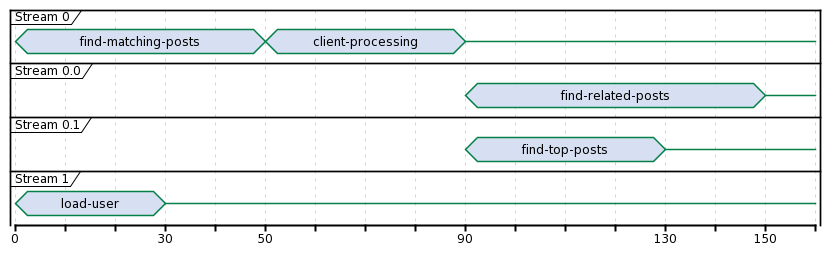

All tasks in the schedule list are executed sequentially in the order in which they have been defined. However, it is also possible to execute multiple tasks concurrently, by wrapping them in a parallel element. The parallel element defines of the following properties:

clients(optional): The number of clients that should execute the provided tasks. If you specify this property, Rally will only use as many clients as you have defined on theparallelelement (see examples)!ramp-up-time-period(optional, defaults to 0): The time-period in seconds across all nested tasks to spend in ramp-up. If this property is defined here, it cannot be overridden in nested tasks. This property requireswarmup-time-periodto be set as well, which must be greater than or equal to the ramp-up time. See the section on ramp-up for more details.warmup-time-period(optional, defaults to 0): Allows to define a default value for all tasks of theparallelelement.time-period(optional, no default value if not specified): Allows to define a default value for all tasks of theparallelelement.warmup-iterations(optional, defaults to 0): Allows to define a default value for all tasks of theparallelelement.iterations(optional, defaults to 1): Allows to define a default value for all tasks of theparallelelement.completed-by(optional): Allows to define the name of one task in thetaskslist, or the valueany. If a specific task name has been provided then as soon as the named task has completed, the wholeparalleltask structure is considered completed. If the valueanyis provided, then any task that is first to complete will render theparallelstructure complete. If this property is not explicitly defined, theparalleltask structure is considered completed as soon as all its subtasks have completed (NOTE: this is _not_ true ifanyis specified, see below warning and example).tasks(mandatory): Defines a list of tasks that should be executed concurrently. Each task in the list can define the following properties that have been defined above:clients,warmup-time-period,time-period,warmup-iterationsanditerations.

Note

parallel elements cannot be nested.

Warning

Specify the number of clients on each task separately. If you specify this number on the parallel element instead, Rally will only use that many clients in total and you will only want to use this behavior in very rare cases (see examples)!

Warning

If the value any is provided for the completed-by parameter, the first completion of a client task in the parallel block will cause all remaining clients and tasks within the parallel block to immediately exit without waiting for completion.

For example, given the below track:

Both

bulk-task-1andbulk-task-2execute in parallel

bulk-task-1Client 1/8 is first to complete its assigned partition of work

bulk-task-1will now cause theparalleltask to complete and not wait for either the remaining sevenbulk-task-1’s clients to complete, or for any ofbulk-task-2’s clients to complete

{

"name": "parallel-any",

"description": "Track completed-by property",

"schedule": [

{

"parallel": {

"completed-by": "any",

"tasks": [

{

"name": "bulk-task-1",

"operation": {

"operation-type": "bulk",

"bulk-size": 1000

},

"clients": 8

},

{

"name": "bulk-task-2",

"operation": {

"operation-type": "bulk",

"bulk-size": 500

},

"clients": 8

}

]

}

}

]

}

operations#

The operations section contains a list of all operations that are available when specifying a schedule. Operations define the static properties of a request against Elasticsearch whereas the schedule element defines the dynamic properties (such as the target throughput).

Each operation consists of the following properties:

name(mandatory): The name of this operation. You can choose this name freely. It is only needed to reference the operation when defining schedules.operation-type(mandatory): Type of this operation. See below for the operation types that are supported out of the box in Rally. You can also add arbitrary operations by defining custom runners.include-in-reporting(optional, defaults totruefor normal operations and tofalsefor administrative operations): Whether or not this operation should be included in the command line report. For example you might want Rally to create an index for you but you are not interested in detailed metrics about it. Note that Rally will still record all metrics in the metrics store.assertions(optional, defaults toNone): A list of assertions that should be checked. See below for more details.request-timeout(optional, defaults toNone): The client-side timeout for this operation. Represented as a floating-point number in seconds, e.g.1.5.headers(optional, defaults toNone): A dictionary of key-value pairs to pass as headers in the operation.opaque-id(optional, defaults toNone[unused]): A special ID set as the value ofx-opaque-idin the client headers of the operation. Overrides existingx-opaque-identries inheaders(case-insensitive).

Assertions

Use assertions for sanity checks, e.g. to ensure a query returns results. Assertions need to be defined with the following properties. All of them are mandatory:

property: A dot-delimited path to the meta-data field to be checked. Only meta-data fields that are returned by an operation are supported. See the respective “meta-data” section of an operation for the supported meta-data.condition: The following conditions are supported:<,<=,==,>=,>.value: The expected value.

Assertions are disabled by default and can be enabled with the command line flag --enable-assertions. A failing assertion aborts the benchmark.

Example:

{

"name": "term",

"operation-type": "search",

"detailed-results": true,

"assertions": [

{

"property": "hits",

"condition": ">",

"value": 0

}

],

"body": {

"query": {

"term": {

"country_code.raw": "AT"

}

}

}

}

Note

This requires to set detailed-results to true so the search operation gathers additional meta-data, such as the number of hits.

If assertions are enabled with --enable-assertions and this assertion fails, it exits with the following error message:

[ERROR] Cannot race. Error in load generator [0]

Cannot run task [term]: Expected [hits] to be > [0] but was [0].

Retries

Some of the operations below are also retryable (marked accordingly below). Retryable operations expose the following properties:

retries(optional, defaults to 0): The number of times the operation is retried.retry-until-success(optional, defaults tofalse): Retries until the operation returns a success. This will also forcibly setretry-on-errortotrue.retry-wait-period(optional, defaults to 0.5): The time in seconds to wait between retry attempts.retry-on-timeout(optional, defaults totrue): Whether to retry on connection timeout.retry-on-error(optional, defaults tofalse): Whether to retry on errors (e.g. when an index could not be deleted).

Depending on the operation type a couple of further parameters can be specified.

bulk#

With the operation type bulk you can execute bulk requests.

Properties#

bulk-size(mandatory): Defines the bulk size in number of documents.ingest-percentage(optional, defaults to 100): A number between (0, 100] that defines how much of the document corpus will be bulk-indexed.corpora(optional): A list of document corpus names that should be targeted by this bulk-index operation. Only needed if thecorporasection contains more than one document corpus and you don’t want to index all of them with this operation.indices(optional): A list of index names that defines which indices should be used by this bulk-index operation. Rally will then only select the documents files that have a matchingtarget-indexspecified.batch-size(optional): Defines how many documents Rally will read at once. This is an expert setting and only meant to avoid accidental bottlenecks for very small bulk sizes (e.g. if you want to benchmark with a bulk-size of 1, you should setbatch-sizehigher).pipeline(optional): Defines the name of an (existing) ingest pipeline that should be used.conflicts(optional): Type of index conflicts to simulate. If not specified, no conflicts will be simulated (also read below on how to use external index ids with no conflicts). Valid values are: ‘sequential’ (A document id is replaced with a document id with a sequentially increasing id), ‘random’ (A document id is replaced with a document id with a random other id).conflict-probability(optional, defaults to 25 percent): A number between [0, 100] that defines how many of the documents will get replaced. Combiningconflicts=sequentialandconflict-probability=0makes Rally generate index ids by itself, instead of relying on Elasticsearch’s automatic id generation.on-conflict(optional, defaults toindex): Determines whether Rally should use the actionindexorupdateon id conflicts.recency(optional, defaults to 0): A number between [0,1] indicating whether to bias conflicting ids towards more recent ids (recencytowards 1) or whether to consider all ids for id conflicts (recencytowards 0). See the diagram below for details.detailed-results(optional, defaults tofalse): Records more detailed meta-data for bulk requests. As it analyzes the corresponding bulk response in more detail, this might incur additional overhead which can skew measurement results. See the section below for the meta-data that are returned. This property must be set totruefor individual bulk request failures to be logged by Rally.timeout(optional, defaults to1m): Defines the time period that Elasticsearch will wait per action until it has finished processing the following operations: automatic index creation, dynamic mapping updates, waiting for active shards.refresh(optional): Control Elasticsearch refresh behavior for bulk requests via therefreshbulk API query parameter. Valid values aretrue,wait_for, andfalse. Parameter values are specified as a string. Iftrue, Elasticsearch will refresh target shards in the background. Ifwait_for, Elasticsearch blocks bulk requests until affected shards have been refreshed. Iffalse, Elasticsearch will use the default refresh behavior.looped(optional, defaults tofalse): If set totrue,bulkoperation will continue from the beginning upon completing the corpus. This option should be combined withtime-periodoriterationproperties at the task level, otherwise Rally will never finish the task.

With multiple clients, Rally will split each document using as many splits as there are clients. This ensures that the bulk index operations are efficiently parallelized but has the drawback that the ingestion is not done in the order of each document. For example, if clients is set to 2, one client will index the document starting from the beginning, while the other will index starting from the middle.

Additionally, if there are multiple documents or corpora, Rally will try to index all documents in parallel in two ways:

Each client will have a different starting point. For example, in a track with 2 corpora and 5 clients, clients 1, 3, and 5 will start with the first corpus and clients 2 and 4 will start with the second corpus.

Each client is assigned to multiple documents. Client 1 will start with the first split of the first document of the first corpus. Then it will move on to the first split of the first document of the second corpus, and so on.

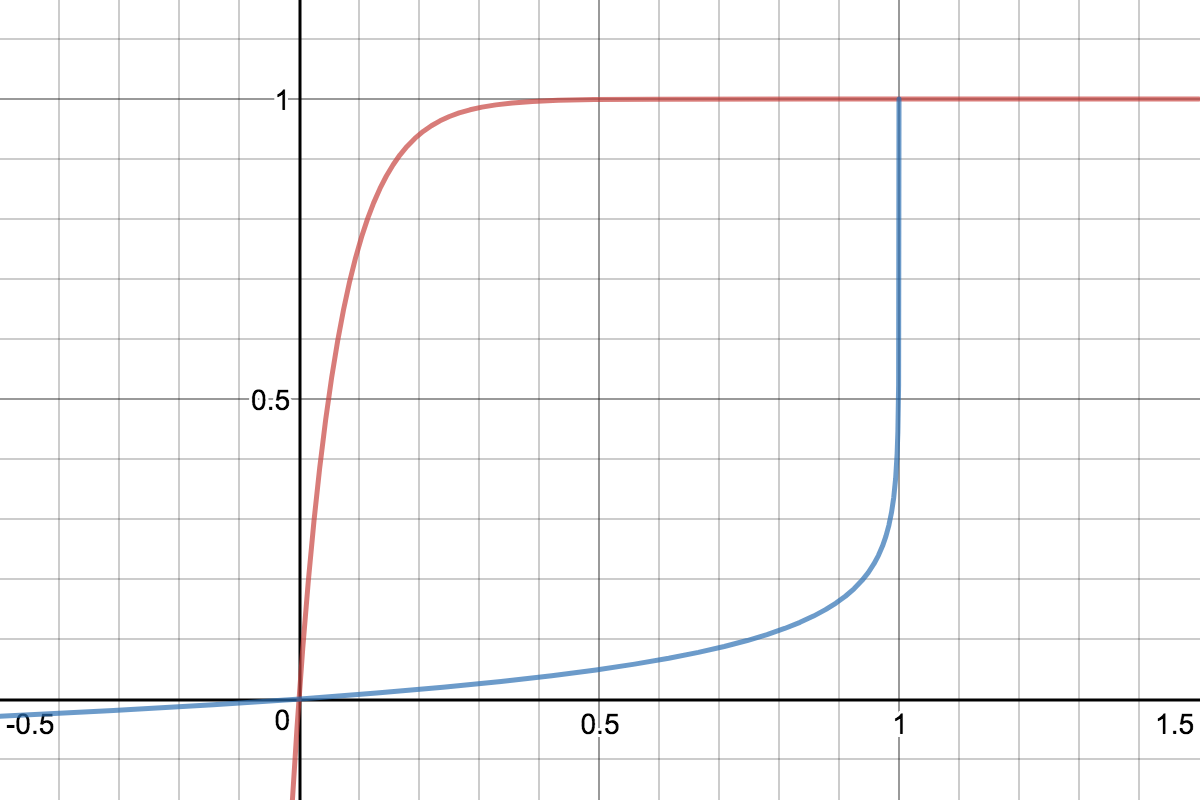

The image below shows how Rally behaves with a recency set to 0.5. Internally, Rally uses the blue function for its calculations but to understand the behavior we will focus on red function (which is just the inverse). Suppose we have already generated ids from 1 to 100 and we are about to simulate an id conflict. Rally will randomly choose a value on the y-axis, e.g. 0.8 which is mapped to 0.1 on the x-axis. This means that in 80% of all cases, Rally will choose an id within the most recent 10%, i.e. between 90 and 100. With 20% probability the id will be between 1 and 89. The closer recency gets to zero, the “flatter” the red curve gets and the more likely Rally will choose less recent ids.

You can also explore the recency calculation interactively.

Example:

{

"name": "index-append",

"operation-type": "bulk",

"bulk-size": 5000

}

Throughput will be reported as number of indexed documents per second.

Meta-data#

The following meta-data are always returned:

index: name of the affected index. May benullif it could not be derived.weight: operation-agnostic representation of the bulk size denoted inunit.unit: The unit in which to interpretweight.success: A boolean indicating whether the bulk request has succeeded.success-count: Number of successfully processed bulk items for this request. This value will only be determined in case of errors or the bulk-size has been specified in docs.error-count: Number of failed bulk items for this request.took: Value of the thetookproperty in the bulk response.

If detailed-results is true the following meta-data are returned in addition:

ops: A nested document with the operation name as key (e.g.index,update,delete) and various counts as values.item-countcontains the total number of items for this key. Additionally, we return a separate counter for each result (indicating e.g. the number of created items, the number of deleted items etc.).shards_histogram: An array of hashes where each hash has two keys:item-countcontains the number of items to which a shard distribution applies andshardscontains another hash with the actual distribution oftotal,successfulandfailedshards (see examples below).bulk-request-size-bytes: Total size of the bulk request body in bytes.total-document-size-bytes: Total size of all documents within the bulk request body in bytes.

Examples

If detailed-results is false a typical return value is:

{

"index": "my_index",

"weight": 5000,

"unit": "docs",

"success": True,

"success-count": 5000,

"error-count": 0,

"took": 20

}

Whereas the response will look as follow if there are bulk errors:

{

"index": "my_index",

"weight": 5000,

"unit": "docs",

"success": False,

"success-count": 4000,

"error-count": 1000,

"took": 20

}

If detailed-results is true a typical return value is:

{

"index": "my_index",

"weight": 5000,

"unit": "docs",

"bulk-request-size-bytes": 2250000,

"total-document-size-bytes": 2000000,

"success": True,

"success-count": 5000,

"error-count": 0,

"took": 20,

"ops": {

"index": {

"item-count": 5000,

"created": 5000

}

},

"shards_histogram": [

{

"item-count": 5000,

"shards": {

"total": 2,

"successful": 2,

"failed": 0

}

}

]

}

An example error response may look like this:

{

"index": "my_index",

"weight": 5000,

"unit": "docs",

"bulk-request-size-bytes": 2250000,

"total-document-size-bytes": 2000000,

"success": False,

"success-count": 4000,

"error-count": 1000,

"took": 20,

"ops": {

"index": {

"item-count": 5000,

"created": 4000,

"noop": 1000

}

},

"shards_histogram": [

{

"item-count": 4000,

"shards": {

"total": 2,

"successful": 2,

"failed": 0

}

},

{

"item-count": 500,

"shards": {

"total": 2,

"successful": 1,

"failed": 1

}

},

{

"item-count": 500,

"shards": {

"total": 2,

"successful": 0,

"failed": 2

}

}

]

}

force-merge#

With the operation type force-merge you can call the force merge API.

Properties#

index(optional, defaults to the indices defined in theindicessection or the data streams defined in thedata-streamssection. If neither are defined defaults to_all.): The name of the index or data stream that should be force-merged.mode(optional, default toblocking): In the defaultblockingmode the Elasticsearch client blocks until the operation returns or times out as dictated by the client-options. In mode polling the client timeout is ignored. Instead, the api call is given 1s to complete. If the operation has not finished, the operator will poll everypoll-perioduntil all force merges are complete.poll-period(defaults to 10s): Only applicable ifmodeis set topolling. Determines the internal at which a check is performed that all force merge operations are complete.max-num-segments(optional) The number of segments the index should be merged into. Defaults to simply checking if a merge needs to execute, and if so, executes it.

This is an administrative operation. Metrics are not reported by default. If reporting is forced by setting include-in-reporting to true, then throughput is reported as the number of completed force-merge operations per second.

Meta-data#

This operation returns no meta-data.

index-stats#

With the operation type index-stats you can call the index stats API.

Properties#

index(optional, defaults to _all): An index pattern that defines which indices should be targeted by this operation.condition(optional, defaults to no condition): A structured object with the propertiespathandexpected-value. If the actual value returned by index stats API is equal to the expected value at the provided path, this operation will return successfully. See below for an example how this can be used.

In the following example the index-stats operation will wait until all segments have been merged:

{

"operation-type": "index-stats",

"index": "_all",

"condition": {

"path": "_all.total.merges.current",

"expected-value": 0

},

"retry-until-success": true

}

Throughput will be reported as number of completed index-stats operations per second.

This operation is retryable.

Meta-data#

weight: Always 1.unit: Always “ops”.success: A boolean indicating whether the operation has succeeded.

node-stats#

With the operation type nodes-stats you can execute nodes stats API. It does not support any parameters.

Throughput will be reported as number of completed node-stats operations per second.

Meta-data#

This operation returns no meta-data.

search#

With the operation type search you can execute request body searches.

Properties#

index(optional): An index pattern that defines which indices or data streams should be targeted by this query. Only needed if theindicesordata-streamssection contains more than one index or data stream respectively. Otherwise, Rally will automatically derive the index or data stream to use. If you have defined multiple indices or data streams and want to query all of them, just specify"index": "_all".type(optional): Defines the type within the specified index for this query. By default, notypewill be used and the query will be performed across all types in the provided index. Also, types have been removed in Elasticsearch 7.0.0 so you must not specify this property if you want to benchmark Elasticsearch 7.0.0 or later.cache(optional): Whether to use the query request cache. By default, Rally will define no value thus the default depends on the benchmark candidate settings and Elasticsearch version. When Rally is used against Elastic Serverless the default isfalse.request-params(optional): A structure containing arbitrary request parameters. The supported parameters names are documented in the Search URI Request docs.Note

Parameters that are implicitly set by Rally (e.g.

bodyorrequest_cache) are not supported (i.e. you should not try to set them and if so expect unspecified behavior).Rally will not attempt to serialize the parameters and pass them as is. Always use “true” / “false” strings for boolean parameters (see example below).

body(mandatory): The query body.response-compression-enabled(optional, defaults totrue): Allows to disable HTTP compression of responses. As these responses are sometimes large and decompression may be a bottleneck on the client, it is possible to turn off response compression.detailed-results(optional, defaults tofalse): Records more detailed meta-data about queries. As it analyzes the corresponding response in more detail, this might incur additional overhead which can skew measurement results. This flag is ineffective for scroll queries.pages(optional, deprecated): Number of pages to retrieve. If this parameter is present, a scroll query will be executed. If you want to retrieve all result pages, use the value “all”. This parameter is deprecated and will be replaced with thescroll-searchoperation in a future release.results-per-page(optional): Number of documents to retrieve per page. This maps to the Search API’ssizeparameter, and can be used for scroll and non-scroll searches. Defaults to10

Example:

{

"name": "default",

"operation-type": "search",

"body": {

"query": {

"match_all": {}

}

},

"request-params": {

"_source_include": "some_field",

"analyze_wildcard": "false"

}

}

For scroll queries, throughput will be reported as number of retrieved pages per second (pages/s). The rationale is that each HTTP request corresponds to one operation and we need to issue one HTTP request per result page.

For other queries, throughput will be reported as number of search requests per second (ops/s).

Note that if you use a dedicated Elasticsearch metrics store, you can also use other request-level meta-data such as the number of hits for your own analyses.

Meta-data#

The following meta data are always returned:

weight: “weight” of an operation. Always 1 for regular queries and the number of retrieved pages for scroll queries.unit: The unit in which to interpretweight. Always “ops” for regular queries and “pages” for scroll queries.success: A boolean indicating whether the query has succeeded.

If detailed-results is true the following meta-data are returned in addition:

hits: Total number of hits for this query.hits_relation: whetherhitsis accurate (eq) or a lower bound of the actual hit count (gte).timed_out: Whether the query has timed out. For scroll queries, this flag istrueif the flag wastruefor any of the queries issued.took: Value of the thetookproperty in the query response. For scroll queries, this value is the sum of alltookvalues in query responses.

paginated-search#

With the operation type paginated-search you can execute paginated searches, specifically using the search_after mechanism.

Properties#

index(optional): An index pattern that defines which indices or data streams should be targeted by this query. Only needed if theindicesordata-streamssection contains more than one index or data stream respectively. Otherwise, Rally will automatically derive the index or data stream to use. If you have defined multiple indices or data streams and want to query all of them, just specify"index": "_all".cache(optional): Whether to use the query request cache. By default, Rally will define no value thus the default depends on the benchmark candidate settings and Elasticsearch version. When Rally is used against Elastic Serverless the default isfalse.request-params(optional): A structure containing arbitrary request parameters. The supported parameters names are documented in the Search URI Request docs.Note

Parameters that are implicitly set by Rally (e.g.

bodyorrequest_cache) are not supported (i.e. you should not try to set them and if so expect unspecified behavior).Rally will not attempt to serialize the parameters and pass them as is. Always use “true” / “false” strings for boolean parameters (see example below).

body(mandatory): The query body.pages(mandatory): Number of pages to retrieve (at most) for this search. If a query yields fewer results than the specified number of pages we will terminate earlier. To retrieve all result pages, use the value “all”.results-per-page(optional): Number of results to retrieve per page. This maps to the Search API’ssizeparameter, and can be used for paginated and non-paginated searches. Defaults to10with-point-in-time-from(optional): Thenameof anopen-point-in-timeoperation. Causes the search to use the generated point in time.Note

This parameter requires usage of a

compositeoperation containing both theopen-point-in-timetask and this search.response-compression-enabled(optional, defaults totrue): Allows to disable HTTP compression of responses. As these responses are sometimes large and decompression may be a bottleneck on the client, it is possible to turn off response compression.

Example:

{

"name": "default",

"operation-type": "paginated-search",

"pages": 10,

"body": {

"query": {

"match_all": {}

}

},

"request-params": {

"_source_include": "some_field",

"analyze_wildcard": "false"

}

}

Note

See also the close-point-in-time operation for a larger example.

Throughput will be reported as number of retrieved pages per second (pages/s). The rationale is that each HTTP request corresponds to one operation and we need to issue one HTTP request per result page. Note that if you use a dedicated Elasticsearch metrics store, you can also use other request-level meta-data such as the number of hits for your own analyses.

Meta-data#

weight: “weight” of an operation, in this case the number of retrieved pages.unit: The unit in which to interpretweight, in this casepages.success: A boolean indicating whether the query has succeeded.hits: Total number of hits for this query.hits_relation: whetherhitsis accurate (eq) or a lower bound of the actual hit count (gte).timed_out: Whether any of the issued queries has timed out.took: The sum of alltookvalues in query responses.

scroll-search#

With the operation type scroll-search you can execute scroll-based searches.

Properties#

index(optional): An index pattern that defines which indices or data streams should be targeted by this query. Only needed if theindicesordata-streamssection contains more than one index or data stream respectively. Otherwise, Rally will automatically derive the index or data stream to use. If you have defined multiple indices or data streams and want to query all of them, just specify"index": "_all".type(optional): Defines the type within the specified index for this query. By default, notypewill be used and the query will be performed across all types in the provided index. Also, types have been removed in Elasticsearch 7.0.0 so you must not specify this property if you want to benchmark Elasticsearch 7.0.0 or later.cache(optional): Whether to use the query request cache. By default, Rally will define no value thus the default depends on the benchmark candidate settings and Elasticsearch version. When Rally is used against Elastic Serverless the default isfalse.request-params(optional): A structure containing arbitrary request parameters. The supported parameters names are documented in the Search URI Request docs.Note

Parameters that are implicitly set by Rally (e.g.

bodyorrequest_cache) are not supported (i.e. you should not try to set them and if so expect unspecified behavior).Rally will not attempt to serialize the parameters and pass them as is. Always use “true” / “false” strings for boolean parameters (see example below).

body(mandatory): The query body.response-compression-enabled(optional, defaults totrue): Allows to disable HTTP compression of responses. As these responses are sometimes large and decompression may be a bottleneck on the client, it is possible to turn off response compression.pages(mandatory): Number of pages to retrieve (at most) for this search. If a query yields fewer results than the specified number of pages we will terminate earlier. To retrieve all result pages, use the value “all”.results-per-page(optional): Number of results to retrieve per page.

Example:

{

"name": "default",

"operation-type": "scroll-search",

"pages": 10,

"body": {

"query": {

"match_all": {}

}

},

"request-params": {

"_source_include": "some_field",

"analyze_wildcard": "false"

}

}

Throughput will be reported as number of retrieved pages per second (pages/s). The rationale is that each HTTP request corresponds to one operation and we need to issue one HTTP request per result page. Note that if you use a dedicated Elasticsearch metrics store, you can also use other request-level meta-data such as the number of hits for your own analyses.

Meta-data#

weight: “weight” of an operation, in this case the number of retrieved pages.unit: The unit in which to interpretweight, in this casepages.success: A boolean indicating whether the query has succeeded.hits: Total number of hits for this query.hits_relation: whetherhitsis accurate (eq) or a lower bound of the actual hit count (gte).timed_out: Whether any of the issued queries has timed out.took: The sum of alltookvalues in query responses.

composite-agg#

The operation type composite-agg allows paginating through composite aggregations.

Properties#

index(optional): An index pattern that defines which indices or data streams should be targeted by this query. Only needed if theindicesordata-streamssection contains more than one index or data stream respectively. Otherwise, Rally will automatically derive the index or data stream to use. If you have defined multiple indices or data streams and want to query all of them, just specify"index": "_all".cache(optional): Whether to use the query request cache. By default, Rally will define no value thus the default depends on the benchmark candidate settings and Elasticsearch version. When Rally is used against Elastic Serverless the default isfalse.request-params(optional): A structure containing arbitrary request parameters. The supported parameters names are documented in the Search URI Request docs.Note

Parameters that are implicitly set by Rally (e.g.

bodyorrequest_cache) are not supported (i.e. you should not try to set them and if so expect unspecified behavior).Rally will not attempt to serialize the parameters and pass them as is. Always use “true” / “false” strings for boolean parameters (see example below).

body(mandatory): The query body.pages(optional): Number of pages to retrieve (at most) for this search. If the composite aggregation yields fewer results than the specified number of pages we will terminate earlier. To retrieve all result pages, use the value “all”. Defaults to “all”results-per-page(optional): Number of results to retrieve per page. This maps to the composite aggregation’s API’ssizeparameter.with-point-in-time-from(optional): Thenameof anopen-point-in-timeoperation. Causes the search to use the generated point in time.Note

This parameter requires usage of a

compositeoperation containing both theopen-point-in-timetask and this search.response-compression-enabled(optional, defaults totrue): Allows to disable HTTP compression of responses. As these responses are sometimes large and decompression may be a bottleneck on the client, it is possible to turn off response compression.

Example:

{

"name": "default",

"operation-type": "composite-agg",

"pages": 10,

"body": {

"aggs": {

"my_buckets": {

"composite": {

"sources": [

{ "date": { "date_histogram": { "field": "timestamp", "calendar_interval": "1d" } } },

{ "product": { "terms": { "field": "product" } } }

]

}

}

}

},

"request-params": {

"_source_include": "some_field",

"analyze_wildcard": "false"

}

}

Throughput will be reported as number of retrieved pages per second (pages/s). The rationale is that each HTTP request corresponds to one operation and we need to issue one HTTP request per result page. Note that if you use a dedicated Elasticsearch metrics store, you can also use other request-level meta-data such as the number of hits for your own analyses.

Meta-data#

weight: “weight” of an operation, in this case the number of retrieved pages.unit: The unit in which to interpretweight, in this casepages.success: A boolean indicating whether the query has succeeded.hits: Total number of hits for this query.hits_relation: whetherhitsis accurate (eq) or a lower bound of the actual hit count (gte).timed_out: Whether any of the issued queries has timed out.took: The sum of alltookvalues in query responses.

put-pipeline#

With the operation-type put-pipeline you can execute the put pipeline API.

Properties#

id(mandatory): Pipeline id.body(mandatory): Pipeline definition.

In this example we setup a pipeline that adds location information to a ingested document as well as a pipeline failure block to change the index in which the document was supposed to be written. Note that we need to use the raw and endraw blocks to ensure that Rally does not attempt to resolve the Mustache template. See template language for more information.

Example:

{

"name": "define-ip-geocoder",

"operation-type": "put-pipeline",

"id": "ip-geocoder",

"body": {

"description": "Extracts location information from the client IP.",

"processors": [

{

"geoip": {

"field": "clientip",

"properties": [

"city_name",

"country_iso_code",

"country_name",

"location"

]

}

}

],

"on_failure": [

{

"set": {

"field": "_index",

{% raw %}

"value": "failed-{{ _index }}"

{% endraw %}

}

}

]

}

}

Please see the pipeline documentation for details on handling failures in pipelines.

This example requires that the ingest-geoip Elasticsearch plugin is installed.

This is an administrative operation. Metrics are not reported by default. Reporting can be forced by setting include-in-reporting to true.

This operation is retryable.

Meta-data#

This operation returns no meta-data.

put-settings#

With the operation-type put-settings you can execute the cluster update settings API.

Properties#

body(mandatory): The cluster settings to apply.

Example:

{

"name": "increase-watermarks",

"operation-type": "put-settings",

"body": {

"transient" : {

"cluster.routing.allocation.disk.watermark.low" : "95%",

"cluster.routing.allocation.disk.watermark.high" : "97%",

"cluster.routing.allocation.disk.watermark.flood_stage" : "99%"

}

}

}

This is an administrative operation. Metrics are not reported by default. Reporting can be forced by setting include-in-reporting to true.

This operation is retryable.

Meta-data#

This operation returns no meta-data.

cluster-health#

With the operation cluster-health you can execute the cluster health API.

Properties#

request-params(optional): A structure containing any request parameters that are allowed by the cluster health API. Rally will not attempt to serialize the parameters and pass them as is. Always use “true” / “false” strings for boolean parameters (see example below).index(optional): The name of the index that should be used to check.

The cluster-health operation will check whether the expected cluster health and will report a failure if this is not the case. Use --on-error on the command line to control Rally’s behavior in case of such failures.

Example:

{

"name": "check-cluster-green",

"operation-type": "cluster-health",

"index": "logs-*",

"request-params": {

"wait_for_status": "green",

"wait_for_no_relocating_shards": "true"

},

"retry-until-success": true

}

This is an administrative operation. Metrics are not reported by default. Reporting can be forced by setting include-in-reporting to true.

This operation is retryable.

Meta-data#

weight: Always 1.unit: Always “ops”.success: A boolean indicating whether the operation has succeeded.cluster-status: Current cluster status.relocating-shards: The number of currently relocating shards.

refresh#

With the operation refresh you can execute the refresh API.

Properties#

index(optional, defaults to_all): The name of the index or data stream that should be refreshed.

This is an administrative operation. Metrics are not reported by default. Reporting can be forced by setting include-in-reporting to true.

This operation is retryable.

Meta-data#

This operation returns no meta-data.

create-index#

With the operation create-index you can execute the create index API. It supports two modes: it creates either all indices that are specified in the track’s indices section or it creates one specific index defined by this operation.

Properties#

If you want it to create all indices that have been declared in the indices section you can specify the following properties:

settings(optional): Allows to specify additional index settings that will be merged with the index settings specified in the body of the index in theindicessection.request-params(optional): A structure containing any request parameters that are allowed by the create index API. Rally will not attempt to serialize the parameters and pass them as is. Always use “true” / “false” strings for boolean parameters (see example below).

If you want it to create one specific index instead, you can specify the following properties:

index(mandatory): One or more names of the indices that should be created. If only one index should be created, you can use a string otherwise this needs to be a list of strings.body(optional): The body for the create index API call.request-params(optional): A structure containing any request parameters that are allowed by the create index API. Rally will not attempt to serialize the parameters and pass them as is. Always use “true” / “false” strings for boolean parameters (see example below).

Examples

The following snippet will create all indices that have been defined in the indices section. It will reuse all settings defined but override the number of shards:

{

"name": "create-all-indices",

"operation-type": "create-index",

"settings": {

"index.number_of_shards": 1

},

"request-params": {

"wait_for_active_shards": "true"

}

}

With the following snippet we will create a new index that is not defined in the indices section. Note that we specify the index settings directly in the body:

{

"name": "create-an-index",

"operation-type": "create-index",

"index": "people",

"body": {

"settings": {

"index.number_of_shards": 0

},

"mappings": {

"docs": {

"properties": {

"name": {

"type": "text"

}

}

}

}

}

}

Note

Types have been removed in Elasticsearch 7.0.0. If you want to benchmark Elasticsearch 7.0.0 or later you need to remove the mapping type above.

This is an administrative operation. Metrics are not reported by default. Reporting can be forced by setting include-in-reporting to true.

This operation is retryable.

Meta-data#

weight: The number of indices that have been created.unit: Always “ops”.success: A boolean indicating whether the operation has succeeded.

delete-index#

With the operation delete-index you can execute the delete index API. It supports two modes: it deletes either all indices that are specified in the track’s indices section or it deletes one specific index (pattern) defined by this operation.

Properties#

If you want it to delete all indices that have been declared in the indices section, you can specify the following properties:

only-if-exists(optional, defaults totrue): Defines whether an index should only be deleted if it exists.request-params(optional): A structure containing any request parameters that are allowed by the delete index API. Rally will not attempt to serialize the parameters and pass them as is. Always use “true” / “false” strings for boolean parameters (see example below).

If you want it to delete one specific index (pattern) instead, you can specify the following properties:

index(mandatory): One or more names of the indices that should be deleted. If only one index should be deleted, you can use a string otherwise this needs to be a list of strings.only-if-exists(optional, defaults totrue): Defines whether an index should only be deleted if it exists.request-params(optional): A structure containing any request parameters that are allowed by the delete index API. Rally will not attempt to serialize the parameters and pass them as is. Always use “true” / “false” strings for boolean parameters (see example below).

Examples

With the following snippet we will delete all indices that are declared in the indices section but only if they existed previously (implicit default):

{

"name": "delete-all-indices",

"operation-type": "delete-index"

}

With the following snippet we will delete all logs-* indices:

{

"name": "delete-logs",

"operation-type": "delete-index",

"index": "logs-*",

"only-if-exists": false,

"request-params": {

"expand_wildcards": "all",

"allow_no_indices": "true",

"ignore_unavailable": "true"

}

}

This is an administrative operation. Metrics are not reported by default. Reporting can be forced by setting include-in-reporting to true.

This operation is retryable.

Meta-data#

weight: The number of indices that have been deleted.unit: Always “ops”.success: A boolean indicating whether the operation has succeeded.

create-ilm-policy#

With the create-ilm-policy operation you can create or update (if the policy already exists) an ILM policy.

Properties#

policy-name(mandatory): The identifier for the policy.body(mandatory): The ILM policy body.request-params(optional): A structure containing any request parameters that are allowed by the create or update lifecycle policy API. Rally will not attempt to serialize the parameters and pass them as is.

Example

In this example, we create an ILM policy (my-ilm-policy) with specific request-params defined:

{

"schedule": [

{

"operation": {

"operation-type": "create-ilm-policy",

"policy-name": "my-ilm-policy",

"request-params": {

"master_timeout": "30s",

"timeout": "30s"

},

"body": {

"policy": {

"phases": {

"warm": {

"min_age": "10d",

"actions": {

"forcemerge": {

"max_num_segments": 1

}

}

},

"delete": {

"min_age": "30d",

"actions": {

"delete": {}

}

}

}

}

}

}

}

]

}

Meta-data#

weight: The number of indices that have been deleted.unit: Always “ops”.success: A boolean indicating whether the operation has succeeded.

delete-ilm-policy#

With the delete-ilm-policy operation you can delete an ILM policy.

Properties#

policy-name(mandatory): The identifier for the policy.request-params(optional): A structure containing any request parameters that are allowed by the create or update lifecycle policy API. Rally will not attempt to serialize the parameters and pass them as is.

Example

In this example, we delete an ILM policy (my-ilm-policy) with specific request-params defined:

{

"schedule": [

{

"operation": {

"operation-type": "delete-ilm-policy",

"policy-name": "my-ilm-policy",

"request-params": {

"master_timeout": "30s",

"timeout": "30s"

}

}

}

]

}

Meta-data#

weight: The number of indices that have been deleted.unit: Always “ops”.success: A boolean indicating whether the operation has succeeded.

create-data-stream#

With the operation create-data-stream you can execute the create data stream API. It supports two modes: it creates either all data streams that are specified in the track’s data-streams section or it creates one specific data stream defined by this operation.

Properties#

If you want it to create all data streams that have been declared in the data-streams section you can specify the following properties:

request-params(optional): A structure containing any request parameters that are allowed by the create data stream API. Rally will not attempt to serialize the parameters and pass them as is. Always use “true” / “false” strings for boolean parameters (see example below).

If you want it to create one specific data stream instead, you can specify the following properties:

data-stream(mandatory): One or more names of the data streams that should be created. If only one data stream should be created, you can use a string otherwise this needs to be a list of strings.request-params(optional): A structure containing any request parameters that are allowed by the create index API. Rally will not attempt to serialize the parameters and pass them as is. Always use “true” / “false” strings for boolean parameters (see example below).

Examples

The following snippet will create all data streams that have been defined in the data-streams section:

{

"name": "create-all-data-streams",

"operation-type": "create-data-stream",

"request-params": {

"wait_for_active_shards": "true"

}

}

With the following snippet we will create a new data stream that is not defined in the data-streams section:

{

"name": "create-a-data-stream",

"operation-type": "create-data-stream",

"data-stream": "people"

}

This is an administrative operation. Metrics are not reported by default. Reporting can be forced by setting include-in-reporting to true.

This operation is retryable.

Meta-data#

weight: The number of data streams that have been created.unit: Always “ops”.success: A boolean indicating whether the operation has succeeded.

delete-data-stream#

With the operation delete-data-stream you can execute the delete data stream API. It supports two modes: it deletes either all data streams that are specified in the track’s data-streams section or it deletes one specific data stream (pattern) defined by this operation.

Properties#

If you want it to delete all data streams that have been declared in the data-streams section, you can specify the following properties:

only-if-exists(optional, defaults totrue): Defines whether a data stream should only be deleted if it exists.request-params(optional): A structure containing any request parameters that are allowed by the delete index API. Rally will not attempt to serialize the parameters and pass them as is. Always use “true” / “false” strings for boolean parameters (see example below).

If you want it to delete one specific data stream (pattern) instead, you can specify the following properties:

data-stream(mandatory): One or more names of the data streams that should be deleted. If only one data stream should be deleted, you can use a string otherwise this needs to be a list of strings.only-if-exists(optional, defaults totrue): Defines whether a data stream should only be deleted if it exists.request-params(optional): A structure containing any request parameters that are allowed by the delete data stream API. Rally will not attempt to serialize the parameters and pass them as is. Always use “true” / “false” strings for boolean parameters (see example below).

Examples

With the following snippet we will delete all data streams that are declared in the data-streams section but only if they existed previously (implicit default):

{

"name": "delete-all-data-streams",

"operation-type": "delete-data-stream"

}

With the following snippet we will delete all ds-logs-* data streams:

{

"name": "delete-data-streams",

"operation-type": "delete-data-stream",

"data-stream": "ds-logs-*",

"only-if-exists": false

}

This is an administrative operation. Metrics are not reported by default. Reporting can be forced by setting include-in-reporting to true.

This operation is retryable.

Meta-data#

weight: The number of data streams that have been deleted.unit: Always “ops”.success: A boolean indicating whether the operation has succeeded.

create-composable-template#

With the operation create-composable-template you can execute the create index template API. It supports two modes: it creates either all templates that are specified in the track’s composable-templates section or it creates one specific template defined by this operation.

Properties#

If you want it to create templates that have been declared in the composable-templates section you can specify the following properties:

template(optional): If you specify a template name, only the template with this name will be created.settings(optional): Allows to specify additional settings that will be merged with the settings specified in the body of the template in thecomposable-templatessection.request-params(optional): A structure containing any request parameters that are allowed by the create template API. Rally will not attempt to serialize the parameters and pass them as is. Always use “true” / “false” strings for boolean parameters (see example below).

If you want it to create one specific template instead, you can specify the following properties: